When observing ant colonies, bee swarms, or human societies, it is remarkable how order and equilibrium emerge even though each individual acts independently. A system in which multiple entities (agents) autonomously make decisions and influence one another to achieve a common goal is called a multi-agent system. From an engineering perspective, decentralized autonomous systems can also be considered an example of a multi-agent system.

Our lab studies multi-agent systems broadly, focusing on the mechanisms by which order and equilibrium emerge from the behavior of individual agents and on the engineering applications of those mechanisms. Our work is interdisciplinary, drawing on theories and methods such as mathematical logic (formal methods), game theory, distributed algorithms, and distributed optimization. Our current main research topics are described below.

Formal methods apply logic to provide a mathematically rigorous way to verify (prove) that software specifications are implemented as the programmer intended. Our lab studies formal methods applied to various systems, particularly decentralized autonomous network systems. One such project investigates the fault tolerance of epistemic gossip protocols.

Gossip protocols are used in peer-to-peer networks to enable all nodes to share information through one-to-one communication between adjacent nodes. Research on gossip protocols dates back to the 1970s with the study of the "gossip problem," which asks how many calls are required for everyone in a group to learn everyone else’s secrets through pairwise communication. Numerous gossip protocols have been developed following this theoretical work. Recently, epistemic gossip protocols have been proposed, where nodes deterministically select communication partners based on their knowledge states. In these protocols, each node autonomously determines its communication partner, inferring the knowledge states of others to minimize unnecessary communication.
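
To make the idea of knowledge-based partner selection concrete, here is a minimal sketch of a rule often called "Learn New Secrets" in the gossip literature: an agent may call another agent only if it does not yet know that agent's secret. This is not our lab's protocol. The complete network, the random choice among permitted calls, and the function name `learn_new_secrets` are assumptions made for illustration.

```python
import random

def learn_new_secrets(n, seed=0):
    """Simulate one run on a complete network of n agents (illustrative only)."""
    rng = random.Random(seed)
    known = [{i} for i in range(n)]  # known[i] = set of secrets agent i holds
    calls = []
    while True:
        # A call (x, y) is permitted only if x does not yet know y's secret.
        permitted = [(x, y) for x in range(n) for y in range(n)
                     if x != y and y not in known[x]]
        if not permitted:
            break  # every agent now knows every secret
        x, y = rng.choice(permitted)
        merged = known[x] | known[y]  # a call exchanges all secrets both ways
        known[x] = set(merged)
        known[y] = set(merged)
        calls.append((x, y))
    return calls, known

calls, known = learn_new_secrets(6)
print(len(calls), "calls were needed for 6 agents")
```

Because knowledge only grows, each pair of agents talks at most once under this rule, so a run never exceeds n(n-1)/2 calls. The classical result for the gossip problem is that at least 2n-4 calls are needed when n is 4 or more.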

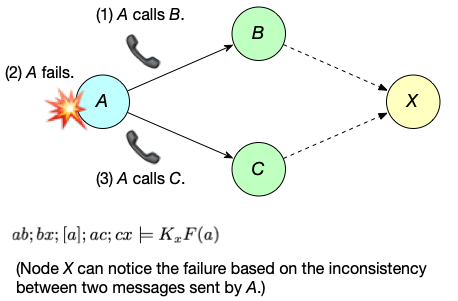

Previous research on epistemic gossip protocols primarily focused on identifying efficient protocols for specific network structures. However, applying these protocols in real systems requires ensuring reliability against various failures in nodes and communication paths, a topic that has received little attention. In response, our lab proposed an epistemic gossip protocol that is resilient to failures, including cases where nodes lose secret information obtained through past communications. By detecting inconsistencies in messages arriving over multiple paths, our protocol allows nodes to infer failures and retransmit lost information, ultimately enabling all nodes to share everyone’s secrets.

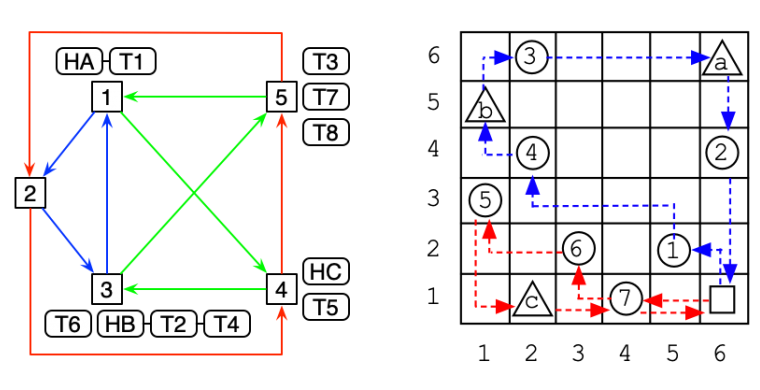

In addition, our lab conducts research on scheduling methods using model checking and security protocol verification through logical reasoning. We also explore topics in distributed systems, such as power-saving techniques and access control in large-scale distributed storage systems.

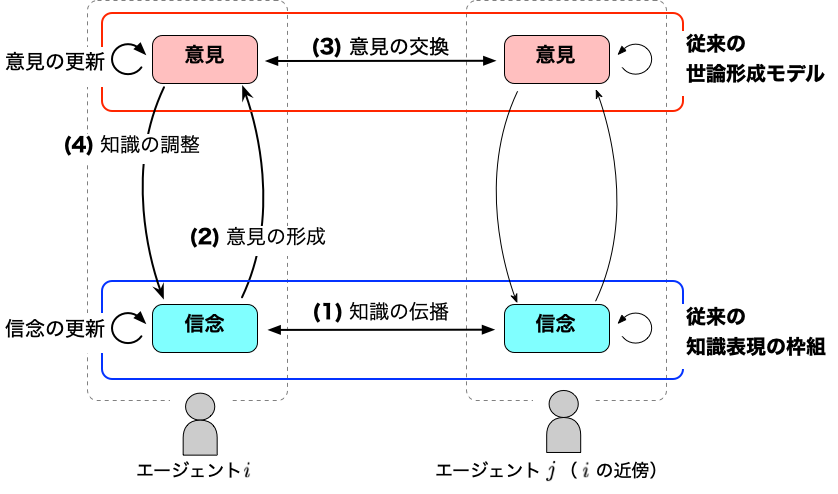

Our lab conducts social analysis using agent-based approaches. In particular, we study how interactions between beliefs and opinions lead to the formation of public opinion. This research is conducted in collaboration with the mOeX research team led by Dr. Jérôme Euzenat at INRIA Grenoble Rhône-Alpes, France.

Many previous studies on opinion dynamics have modeled how individual opinions on a topic are influenced by neighboring agents, leading to public opinion formation. However, in reality, opinions are not formed solely based on the expressed views of others, but also on the knowledge acquired from the environment. Our research aims to build an opinion formation model that incorporates knowledge representation through mathematical logic, clarifying the role of knowledge in opinion formation. We particularly focus on the relationship between the spread of misinformation and the formation of public opinion, examining phenomena such as the emergence of extreme political claims by conspiracy theorists and the polarization of public opinion due to echo chambers, as observed on social media.
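
As a point of reference for the neighbor-influence models mentioned at the start of this paragraph, the following is a minimal sketch of the classic bounded-confidence (Hegselmann-Krause) model, in which each agent averages only the opinions close to its own. It is a baseline from the literature, not our knowledge-based model. The function names and the threshold `eps` are illustrative assumptions.

```python
def bounded_confidence_step(opinions, eps):
    """Each agent moves to the mean of all opinions within eps of its own."""
    updated = []
    for x in opinions:
        close = [y for y in opinions if abs(x - y) <= eps]  # includes x itself
        updated.append(sum(close) / len(close))
    return updated

def run(opinions, eps, steps=50):
    """Apply the update rule a fixed number of times."""
    for _ in range(steps):
        opinions = bounded_confidence_step(opinions, eps)
    return opinions

start = [0.0, 0.1, 0.2, 0.8, 0.9, 1.0]
print(run(start, eps=0.25))  # narrow confidence: two separate groups remain
print(run(start, eps=1.0))   # wide confidence: everyone reaches consensus
```

With a small `eps` the two groups never hear each other and stay apart, which is a simple picture of an echo chamber. The model has no notion of knowledge acquired from the environment, which is the gap our research addresses.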

In addition, we consider board games and card games as social models and study various phenomena that emerge from collective behavior in these games. One of our studies focuses on the emergence of play styles in games.

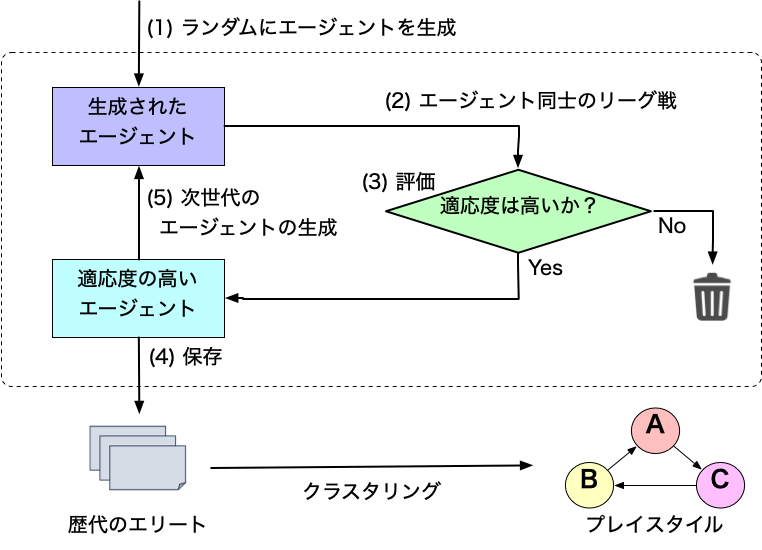

In recent years, research and development of agents that play video games or board games with distinctive play styles has gained attention. Many of these studies predefine a specific play style and have the agent learn with a reward function tailored to that style. However, this approach makes it difficult to discover unknown play styles. Our research aims to build a framework that uses evolutionary computation to generate multiple play styles simultaneously, without prior assumptions. Specifically, we represent characteristic behaviors in game playing as genes, have agents compete against each other, and repeatedly breed the survivors to produce the next generation. By clustering the features of the fittest agents, we can ultimately identify play styles that are well-suited to the game.
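
The loop described above (genes, competition, breeding of survivors, clustering) can be sketched on a toy game. Everything here is an assumption made for illustration rather than our actual framework: the hawk-dove payoffs, the single-number gene (the probability of playing "hawk"), the mutation size, and the gap-based 1-D clustering. For brevity the sketch clusters the whole final population instead of only its fittest members.

```python
import random

def play(gene_a, gene_b, rng):
    """One hawk-dove round; returns the payoff of each player."""
    a_hawk = rng.random() < gene_a
    b_hawk = rng.random() < gene_b
    if a_hawk and b_hawk:
        return -1.0, -1.0  # both fight and both lose
    if a_hawk:
        return 2.0, 0.0
    if b_hawk:
        return 0.0, 2.0
    return 1.0, 1.0        # both share

def evolve(pop_size=20, generations=30, seed=0):
    rng = random.Random(seed)
    pop = [rng.random() for _ in range(pop_size)]
    for _ in range(generations):
        fitness = [0.0] * pop_size
        for i in range(pop_size):              # round-robin competition
            for j in range(i + 1, pop_size):
                pa, pb = play(pop[i], pop[j], rng)
                fitness[i] += pa
                fitness[j] += pb
        ranked = sorted(range(pop_size), key=lambda k: fitness[k], reverse=True)
        survivors = [pop[k] for k in ranked[:pop_size // 2]]
        children = []
        while len(survivors) + len(children) < pop_size:
            p, q = rng.sample(survivors, 2)
            child = (p + q) / 2 + rng.gauss(0, 0.05)  # crossover plus mutation
            children.append(min(1.0, max(0.0, child)))
        pop = survivors + children
    return pop

def cluster_1d(values, gap=0.15):
    """Start a new cluster whenever consecutive sorted values differ by more than gap."""
    clusters = []
    for v in sorted(values):
        if clusters and v - clusters[-1][-1] <= gap:
            clusters[-1].append(v)
        else:
            clusters.append([v])
    return clusters

styles = cluster_1d(evolve())
print(len(styles), "candidate play style(s) found")
```

A real game would need a much richer gene (a vector of behavioral features) and a proper multi-dimensional clustering method; the sketch only shows how the stages fit together.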

Using this framework, we analyze how the generated diverse play styles influence the progression of multi-player games that require complex strategies and how different play styles interact with each other. Furthermore, as an application of the results obtained, we are conducting research to reveal the impact of group diversity on overall utility, such as Pareto optimality.

In addition to these studies, we are developing agents that excel at playing various board and card games, using approaches such as reinforcement learning, game theory, and logical reasoning.

A Distributed Constraint Optimization Problem (DCOP) is a problem in which multiple agents in a network, each adjusting its own variables, collaborate to find the optimal set of variable values. This type of problem is used to model multi-agent systems in which agents need to cooperate, such as the control of sensor networks composed of numerous sensors.
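
To show what "the optimal set of variable values" means, here is a minimal sketch that solves a tiny DCOP instance by centralized brute force. It is not a distributed algorithm; it only defines the answer a distributed algorithm should reach. The three-sensor instance, the `clash` cost function, and the name `solve_dcop` are hypothetical.

```python
from itertools import product

def solve_dcop(domains, constraints):
    """Enumerate every joint assignment and keep the one with the lowest total cost.

    domains:     {variable: [possible values]}
    constraints: {(var_u, var_v): cost_function(value_u, value_v)}
    """
    names = list(domains)
    best_cost, best_assign = None, None
    for values in product(*(domains[n] for n in names)):
        assign = dict(zip(names, values))
        cost = sum(f(assign[u], assign[v]) for (u, v), f in constraints.items())
        if best_cost is None or cost < best_cost:
            best_cost, best_assign = cost, assign
    return best_cost, best_assign

# Hypothetical instance: three sensors in a line each pick channel 0 or 1,
# and neighboring sensors pay a cost of 1 when they pick the same channel.
clash = lambda a, b: 1 if a == b else 0
domains = {"s1": [0, 1], "s2": [0, 1], "s3": [0, 1]}
constraints = {("s1", "s2"): clash, ("s2", "s3"): clash}

cost, assignment = solve_dcop(domains, constraints)
print(cost, assignment)  # 0 {'s1': 0, 's2': 1, 's3': 0}
```

Brute force grows exponentially with the number of variables and requires one party to see every constraint, which is why distributed algorithms are used when each agent knows only its own variables and neighbors.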

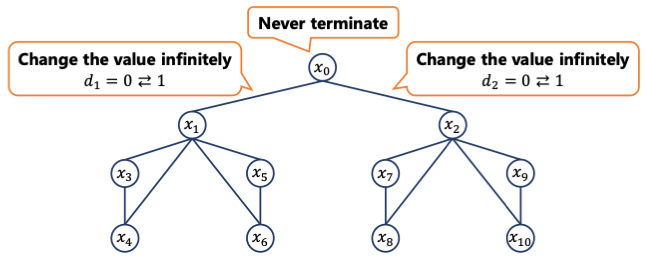

One of the representative algorithms for solving DCOPs is ADOPT (Asynchronous Distributed OPTimization). ADOPT is known for two important properties: termination (the algorithm stops in finite time) and optimality (the solution is optimal when it stops). Successor algorithms based on ADOPT have been thought to retain these properties. However, our lab has presented counterexamples showing that ADOPT and its successors do not always guarantee termination and optimality. We identified the cause of these counterexamples and proposed a revised ADOPT algorithm, for which we proved both termination and optimality.

Our lab is also studying how to make DCOP algorithms more reliable. While many algorithms have been proposed for solving DCOPs, most implicitly assume that all agents are functioning correctly. However, in real systems, some agents may fail due to malfunctions, potentially leading to suboptimal results if existing algorithms are used. Our lab is developing distributed constraint optimization algorithms that are robust against agent and network failures.

We are also developing a framework that combines reinforcement learning with distributed constraint optimization to enable collaborative behavior among agents.